Intel Builds the World’s Largest Neuromorphic System

Intel has built the world's largest neuromorphic system, code-named Hala Point.

NVIDIA has taken the wraps off its new AI platform, Blackwell. The company says it will enable organizations to build and run real-time generative AI on trillion-parameter large language models at up to 25x less cost and energy consumption than its predecessor.

The Blackwell GPU architecture features six transformative technologies for accelerated computing. They will, the company said, help unlock breakthroughs in data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing, and generative AI.

“For three decades we’ve pursued accelerated computing, to enable transformative breakthroughs like deep learning and AI,” said Jensen Huang, founder and CEO of NVIDIA. “Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

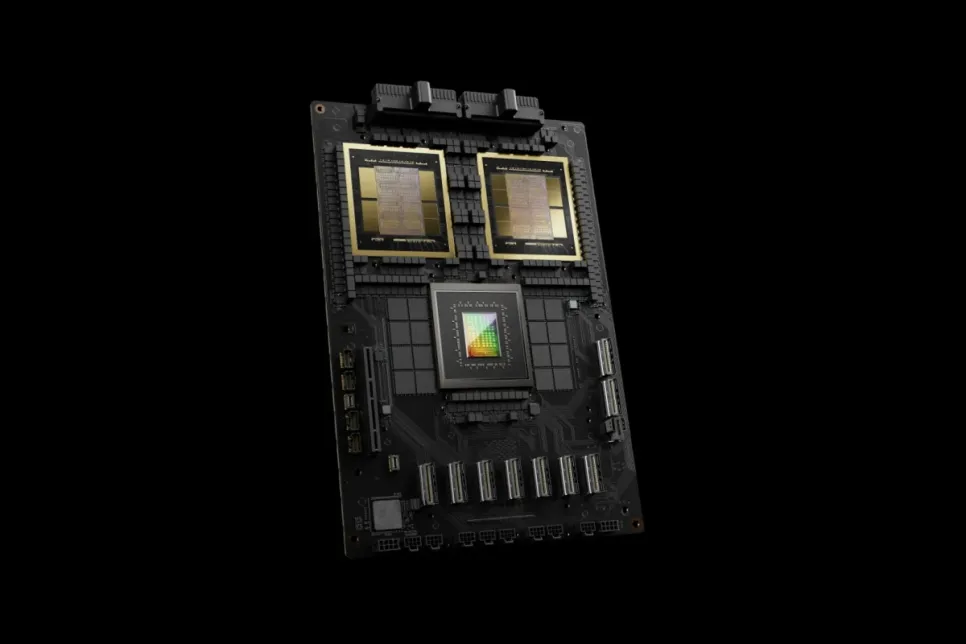

The NVIDIA GB200 Grace Blackwell Superchip connects two B200 Tensor Core GPUs to the Grace CPU over a 900GB/s ultra-low-power NVLink chip-to-chip interconnect. For the highest AI performance, GB200-powered systems can be connected with the Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms, which deliver advanced networking at speeds up to 800Gb/s.
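The two bandwidth figures above use different units: the chip-to-chip link is quoted in gigabytes per second (GB/s), while the networking platforms are quoted in gigabits per second (Gb/s). A minimal sketch of the conversion, assuming decimal units and ignoring any protocol or encoding overhead (the function and variable names are illustrative and do not come from NVIDIA):

```python
BITS_PER_BYTE = 8


def gbit_to_gbyte(gigabits_per_s: float) -> float:
    """Convert a rate in gigabits per second to gigabytes per second."""
    return gigabits_per_s / BITS_PER_BYTE


# Figures quoted in the article.
nvlink_c2c_gbyte = 900.0  # GB/s, chip-to-chip interconnect
network_gbit = 800.0      # Gb/s, Quantum-X800 / Spectrum-X800

network_gbyte = gbit_to_gbyte(network_gbit)
ratio = nvlink_c2c_gbyte / network_gbyte

print(f"{network_gbit:.0f} Gb/s = {network_gbyte:.0f} GB/s")
print(f"Chip-to-chip figure is {ratio:.0f}x the network figure")
```

The ratio is only a unit-normalized comparison of the quoted numbers. The two links serve different purposes, so it should not be read as a benchmark.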

The GB200 is a key component of the NVIDIA GB200 NVL72, a multi-node, liquid-cooled, rack-scale system for the most compute-intensive workloads. It combines 36 Grace Blackwell Superchips, which include 72 Blackwell GPUs and 36 Grace CPUs interconnected by fifth-generation NVLink. Additionally, GB200 NVL72 includes BlueField-3 data processing units to enable cloud network acceleration, composable storage, zero-trust security, and GPU compute elasticity in hyperscale AI clouds.
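The component counts follow directly from the superchip composition described earlier: each GB200 pairs two B200 GPUs with one Grace CPU. A short sketch of that arithmetic, with a hypothetical `Superchip` type and `rack_totals` helper introduced purely for illustration:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Composition of one GB200 superchip as described in the article."""
    gpus: int = 2  # two B200 Tensor Core GPUs
    cpus: int = 1  # one Grace CPU


def rack_totals(superchips: int, chip: Superchip = Superchip()) -> dict:
    """Total GPU and CPU counts for a rack built from identical superchips."""
    return {"gpus": superchips * chip.gpus, "cpus": superchips * chip.cpus}


totals = rack_totals(36)  # the GB200 NVL72 combines 36 superchips
print(totals)
```

The result matches the 72 GPUs and 36 CPUs quoted for the GB200 NVL72, and the "72" in the product name corresponds to the GPU count.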

According to NVIDIA, the GB200 NVL72 provides up to a 30x performance increase over the same number of NVIDIA H100 Tensor Core GPUs for LLM inference workloads, and reduces cost and energy consumption by up to 25x. The platform acts as a single GPU with 1.4 exaflops of AI performance and 30TB of fast memory, and is a building block for the newest DGX SuperPOD.

NVIDIA also offers the HGX B200, a server board that links eight B200 GPUs through NVLink to support x86-based generative AI platforms. HGX B200 supports networking speeds up to 400Gb/s through the Quantum-2 InfiniBand and Spectrum-X Ethernet networking platforms.