Qualcomm Announces Snapdragon 8 Gen 5 Platform

Qualcomm added its latest smartphone platform to its premium-tier Snapdragon range.

GPUs have been called the rare earth metals, even the gold, of artificial intelligence. The reason is that they’re foundational for today’s generative AI era.

Three technical reasons, and many stories, explain why that’s so. Each reason has multiple facets well worth exploring, but at a high level: GPUs employ parallel processing, systems built on them scale up to supercomputing heights, and the GPU software stack for AI is broad and deep.

The net result is that GPUs perform technical calculations faster and with greater energy efficiency than CPUs. That means they deliver leading performance for AI training and inference, as well as gains across a wide array of applications that use accelerated computing.

In its recent report on AI, Stanford’s Human-Centered AI group provided some context. GPU performance has increased roughly 7,000 times since 2003 and price per performance is 5,600 times greater, it reported. The report also cited analysis from Epoch, an independent research group that measures and forecasts AI advances.

“GPUs are the dominant computing platform for accelerating machine learning workloads, and most (if not all) of the biggest models over the last five years have been trained on GPUs … [they have] thereby centrally contributed to the recent progress in AI,” Epoch said on its site.

A 2020 study assessing AI technology for the U.S. government drew similar conclusions. “We expect [leading-edge] AI chips are one to three orders of magnitude more cost-effective than leading-node CPUs when counting production and operating costs,” it said.

A brief look under the hood shows why GPUs and AI make a powerful pairing. An AI model, also called a neural network, is essentially a mathematical lasagna, made from layer upon layer of linear algebra equations. Each equation represents the likelihood that one piece of data is related to another.

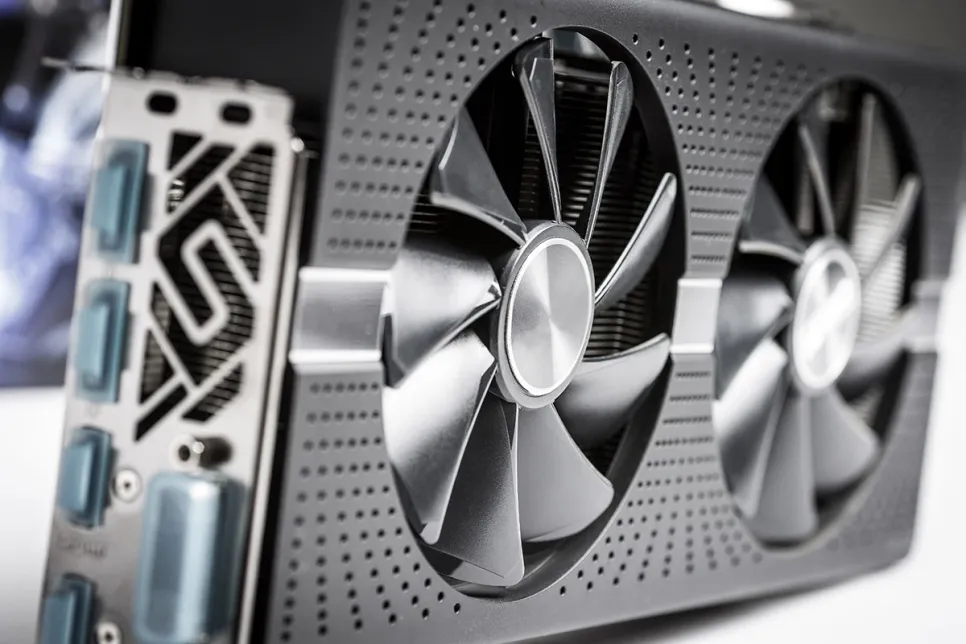

For their part, GPUs pack thousands of cores, tiny calculators working in parallel to slice through the math that makes up an AI model. This, at a high level, is how AI computing works.
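To make the lasagna picture concrete, here is a minimal sketch in plain Python of a tiny layered network. Everything in it is an illustrative assumption rather than anything from a real model: the function names (`dense_layer`, `forward`, `random_layer`), the layer sizes, the random weights and the ReLU step are all hypothetical. It runs on a CPU, one output at a time. The point is only that each output of a layer is an independent dot product, which is the kind of work a GPU can hand out to many cores at once.

```python
# Toy illustration only: names, sizes and weights are hypothetical assumptions.
import random


def dense_layer(inputs, weights, biases):
    """One layer: a dot product plus a bias per output, followed by ReLU."""
    outputs = []
    for row, bias in zip(weights, biases):
        # Each pass through this loop depends only on `inputs` and one row
        # of weights, so the passes could all run at the same time.
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(max(0.0, total))
    return outputs


def forward(inputs, layers):
    """Feed the inputs through every layer in order."""
    for weights, biases in layers:
        inputs = dense_layer(inputs, weights, biases)
    return inputs


def random_layer(rng, n_in, n_out):
    """Build a layer with random weights, standing in for trained ones."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases


rng = random.Random(0)
layers = [random_layer(rng, 4, 8), random_layer(rng, 8, 8), random_layer(rng, 8, 2)]
result = forward([1.0, 0.5, -0.5, 2.0], layers)
print(result)
```

A real model has the same shape, only with far larger layers, so the number of independent dot products per layer runs well beyond what a sequential loop like this one can handle quickly.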

The complexity of AI models is expanding a whopping 10x a year. The current state-of-the-art LLM, GPT-4, packs more than a trillion parameters, a metric of its mathematical density. That’s up from less than 100 million parameters for a popular LLM in 2018.
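As a rough sanity check on those figures, the sketch below works out how many years of 10x growth separate the two parameter counts. It treats "less than 100 million" and "more than a trillion" as round endpoints, which is an assumption made for the arithmetic, and the variable names are illustrative.

```python
import math

# Round endpoints assumed for the arithmetic; the text gives them as bounds.
start_params = 100e6      # "less than 100 million" parameters
end_params = 1e12         # "more than a trillion" parameters
growth_per_year = 10      # "10x a year"

growth_factor = end_params / start_params
years_needed = math.log10(growth_factor) / math.log10(growth_per_year)

print(f"Growth factor: {growth_factor:,.0f}x")
print(f"Years of 10x growth needed: {years_needed:.1f}")
```

Under these assumptions the jump is a factor of 10,000, or about four years of 10x growth, which is broadly in line with the pace the paragraph describes.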

GPU systems have kept pace by ganging up on the challenge. They scale up to supercomputers, thanks to their fast NVLink interconnects and NVIDIA Quantum InfiniBand networks. For example, the DGX GH200, a large-memory AI supercomputer, combines up to 256 NVIDIA GH200 Grace Hopper Superchips into a single data-center-sized GPU with 144 terabytes of shared memory.

Each GH200 Superchip is a single server with 72 Arm Neoverse CPU cores and four petaflops of AI performance. A new four-way Grace Hopper configuration packs 288 Arm cores and 16 petaflops of AI performance, with up to 2.3 terabytes of high-speed memory, into a single compute node. And NVIDIA H200 Tensor Core GPUs, announced in November, pack up to 288 gigabytes of the latest HBM3e memory technology.
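The node-level numbers follow from simple multiplication of the per-Superchip figures, as the short sketch below shows. It uses only the values quoted above; the constant and function names are illustrative, and the per-Superchip memory shares are simple averages, not a statement about how memory is physically laid out.

```python
# Per-Superchip figures as quoted in the text.
CORES_PER_SUPERCHIP = 72
PETAFLOPS_PER_SUPERCHIP = 4


def node_totals(superchips):
    """Return (Arm cores, AI petaflops) for a node with this many Superchips."""
    return superchips * CORES_PER_SUPERCHIP, superchips * PETAFLOPS_PER_SUPERCHIP


cores, petaflops = node_totals(4)
print(cores, petaflops)  # 288 cores and 16 petaflops, matching the four-way node

# Average memory share per Superchip, in terabytes, from the quoted totals.
dgx_share_tb = 144 / 256       # DGX GH200: 144 TB shared across up to 256 Superchips
four_way_share_tb = 2.3 / 4    # four-way node: up to 2.3 TB across 4 Superchips
print(f"{dgx_share_tb:.3f} TB vs {four_way_share_tb:.3f} TB per Superchip")
```

Both averages land a little under 0.6 terabytes per Superchip, so the two quoted memory totals are consistent with each other.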

An expanding ocean of GPU software has evolved since 2007 to enable every facet of AI, from deep-tech features to high-level applications. Many of these elements are available as open-source software, the grab-and-go staple of software developers. More than a hundred of them are packaged into the NVIDIA AI Enterprise platform for companies that require full security and support. Increasingly, they’re also available from major cloud service providers.