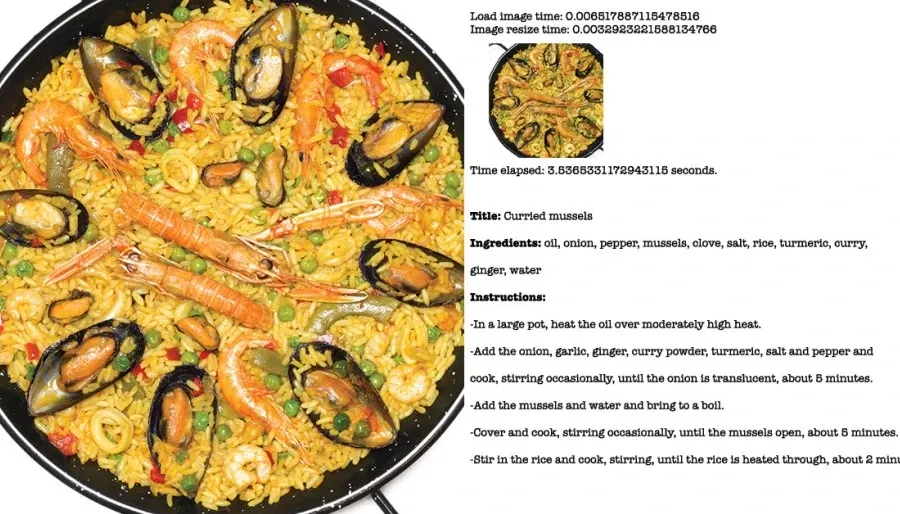

Facebook AI researchers have built a system that can analyze a photo of food and then create a recipe from scratch. Snap a photo of a particular dish and, within seconds, the system can analyze the image and generate a recipe with a list of ingredients and steps needed to create the dish.

The system can’t look at a photo of a particular pie or pancake and determine the exact type of flour used or the skillet or oven temperature, but the system will come up with a recipe for a very credible approximation. While the system is only for research, it has proved to be an interesting challenge for the broader project of teaching machines to see and understand the world.

Their “inverse cooking” system uses computer vision, technology that extracts information from digital images and videos to give computers a high level of understanding of the visual world. It leverages not one but two neural networks, algorithms that are designed to recognize patterns in digital images, whether they are fern fronds, long muzzles or embossed characters.

Michal Drozdzal, a Research Scientist at Facebook AI Research, explains that the inverse cooking system splits the image-to-recipe problem into two parts: One neural network identifies the ingredients that it sees in the dish, while the other devises a recipe from the list. Drozdzal says this enhanced computer vision system is more effective than retrieval-based image-to-recipe techniques, which try to recognize the tasty treat in question and then search a database of preexisting recipes.
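The two-part split Drozdzal describes can be pictured with a toy sketch. Nothing here is the researchers' code: the function names, the score threshold and the template "recipe" are all hypothetical stand-ins, and the photo is represented by an assumed dictionary of per-ingredient confidence scores rather than by real pixels.

```python
from typing import Dict, List, Tuple


def predict_ingredients(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Stage 1 stand-in: keep ingredients whose (hypothetical) score clears the threshold."""
    return sorted(name for name, score in scores.items() if score >= threshold)


def generate_recipe(ingredients: List[str]) -> List[str]:
    """Stage 2 stand-in: build steps from the ingredient list alone.

    A real system would use a trained text generator; this template only
    shows that the second stage works from the first stage's output.
    """
    steps = [f"Prepare the {item}." for item in ingredients]
    steps.append("Combine everything and cook until done.")
    return steps


def inverse_cook(scores: Dict[str, float], threshold: float = 0.5) -> Tuple[List[str], List[str]]:
    """Chain the two stages: ingredients first, then a recipe built from them."""
    ingredients = predict_ingredients(scores, threshold)
    return ingredients, generate_recipe(ingredients)


if __name__ == "__main__":
    # Assumed scores for a paella-like photo; onion is hard to see, so it scores low.
    photo_scores = {"rice": 0.9, "saffron": 0.7, "onion": 0.3}
    found, recipe = inverse_cook(photo_scores)
    print(found)
    for step in recipe:
        print(step)
```

Because the second stage sees only what the first stage hands over, an ingredient that the first stage misses (the onion in this made-up example) cannot show up in the generated steps.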

“Our system outperformed the retrieval system both on ingredient predictions and on generating plausible recipes,” says Drozdzal. The proof is in the paella: Drozdzal and fellow Facebook AI Research scientist Adriana Romero, who met while studying for doctorates at the University of Barcelona, claim their system might even trot out a decent recipe for the Spanish rice dish.

That is no mean feat, because food recognition is one of the toughest areas of natural image understanding. Food comes in all shapes and sizes, what AI scientists call “high intraclass variability,” and changes appearance when it’s cooked. Take an onion, which could be white, yellow or red. It can be sliced into rings, slivers or chunks. You could bake, boil, braise, grill, fry or roast an onion, or just choose to eat it raw. A sautéed onion will be translucent, but sizzle the vegetable in butter, sugar and balsamic vinegar and you get brown manna from heaven: caramelized onions.

Just like an onion, there’s another layer of complexity: The vegetable is certainly present in paella, stews and curry, but it is often invisible to the naked eye. This is why any system of visual ingredient detection and recipe generation benefits from some high-level reasoning and prior knowledge: A standard paella contains some quantity of chopped and fried onion, a cake will likely contain sugar and no more than a pinch of salt, and a croissant will presumably include butter.

Previous image-to-recipe programs were simpler in their approach. In fact, they thought more like gopher librarians than like Le Cordon Bleu chefs. Drozdzal explains that these less sophisticated systems merely retrieved a recipe from a fixed data set based on the similarity of the photo to the images on file: “It was like having a photo of the food and then searching in a huge cookbook of pictures to match it up.”
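The “huge cookbook of pictures” idea amounts to a nearest-neighbor lookup. The sketch below is a minimal illustration under stated assumptions, not the earlier systems' actual code: each photo is assumed to be already reduced to a short list of numbers (an embedding), cosine similarity is an assumed choice of similarity measure, and `retrieve_recipe` and the two-entry cookbook are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_recipe(query: Sequence[float], cookbook: List[Tuple[Sequence[float], str]]) -> str:
    """Return the stored recipe whose photo embedding is most similar to the query."""
    best_embedding, best_recipe = max(
        cookbook, key=lambda entry: cosine_similarity(query, entry[0])
    )
    return best_recipe


if __name__ == "__main__":
    # Hypothetical two-dimensional embeddings for two photos on file.
    cookbook = [
        ([1.0, 0.0], "paella"),
        ([0.0, 1.0], "pancakes"),
    ]
    print(retrieve_recipe([0.9, 0.1], cookbook))
```

A lookup like this can only ever return a recipe that is already on file, which is the limitation the two-network approach is meant to get around.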

The research project will have educational as well as epicurean benefits. “The food that we consume nowadays has changed from being home-cooked to takeout, so we’ve lost the information about how the food was prepared,” says Romero. And it is not just foodies who stand to benefit from the principle of conditional generation, or neural networks that generate outputs from two modalities: the image and the inferred text.

In the meantime, the inverse cooking creators are continuing to fine-tune the system. “Sometimes it can’t predict an ingredient, which means that it won’t be present in the recipe,” says Drozdzal. They also want to train the system to deal with the problem of visually similar foods, whether they’re spaghetti and noodles, mayonnaise and sour cream, or tofu and paneer. Romero adds that she and Drozdzal still haven’t taken the final, most important step in their inverse cooking system: “We haven’t got around to cooking yet.”