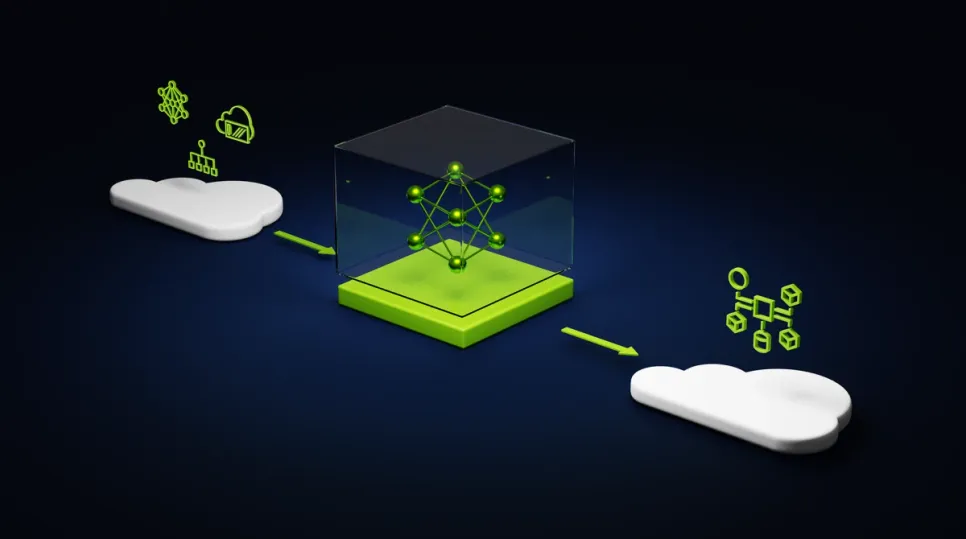

NVIDIA announced an AI foundry service to support the development and tuning of custom generative AI applications for enterprises and startups deploying on Microsoft Azure. The service pulls together three elements (a collection of AI Foundation Models, the NeMo framework and tools, and DGX Cloud AI supercomputing services) that give enterprises an end-to-end solution for creating custom generative AI models. Businesses can then deploy their customized models with NVIDIA AI Enterprise software to power generative AI applications, including intelligent search, summarization, and content generation.

“Enterprises need custom models to perform specialized skills trained on the proprietary DNA of their company — their data,” said Jensen Huang, founder and CEO of NVIDIA. “NVIDIA’s AI foundry service combines our generative AI model technologies, LLM training expertise, and giant-scale AI factory. We built this in Microsoft Azure so enterprises worldwide can connect their custom model with Microsoft’s world-leading cloud services.”

“Our partnership with NVIDIA spans every layer of the Copilot stack — from silicon to software — as we innovate together for this new age of AI,” said Satya Nadella, chairman and CEO of Microsoft. “With NVIDIA’s generative AI foundry service on Microsoft Azure, we’re providing new capabilities for enterprises and startups to build and deploy AI applications on our cloud.”

NVIDIA’s AI foundry service can be used to customize models for generative AI-powered applications across industries, including enterprise software, telecommunications, and media. Customers using the foundry service can choose from several AI Foundation Models, including a new family of NVIDIA Nemotron-3 8B models hosted in the Azure AI model catalog. DGX Cloud AI supercomputing is available on Azure Marketplace. It features instances that customers can rent, scaling to thousands of Tensor Core GPUs, and comes with AI Enterprise software, including NeMo, to speed LLM customization.