CES 2026: NVIDIA Unveils New Open AI Models for Autonomous Vehicles

NVIDIA announced a new family of open AI models, simulation tools, and datasets at CES 2026 in Las Vegas.

The models, tools, and datasets are designed to usher in a new era for autonomous vehicles (AVs).

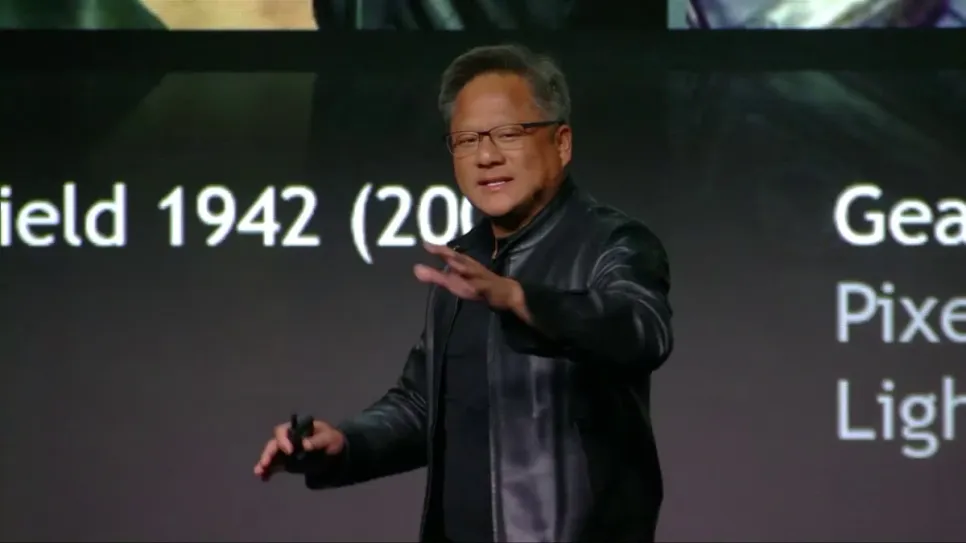

During a keynote address, NVIDIA CEO Jensen Huang said the company’s new Alpamayo family of open-source AI models is the world’s first thinking, reasoning autonomous vehicle AI. The goal of Alpamayo, he said, is to bring humanlike thinking to AV decision-making while applying the same technologies to train and operate physical robots.

“Alpamayo is trained end-to-end, literally from camera in to actuation out,” he explained. “Not only does it take sensor input and activate the steering wheel, brakes, and acceleration, it also reasons about what action it is about to take.” Rather than running directly in the vehicle, NVIDIA said, Alpamayo serves as a large-scale teacher model that developers can fine-tune and use in the backbones of their AV stacks.

At the core of the new family is Alpamayo 1, a 10-billion-parameter vision-language-action (VLA) model built on a chain-of-thought architecture. NVIDIA said it allows AVs to reason like a human through complex traffic situations, such as a traffic light outage or an approaching pedestrian, without prior experience of them.

“The reason why this is so important is because of the long tail of driving,” Huang said. “It’s impossible for us to simply collect every single possible scenario for everything that could ever happen in every single country, in every single circumstance that’s possibly ever going to happen for the entire population. These long tails will be decomposed into quite normal circumstances that the car knows how to deal with,” he explained.

Alpamayo’s underlying code and datasets are available on Hugging Face. Developers can fine-tune Alpamayo 1 into smaller runtime models for vehicle development. They can also use it to build AV development tools such as reasoning-based evaluators and auto-labelling systems, which automatically tag video data. NVIDIA is also releasing an open dataset with over 1,700 hours of driving data collected across a range of conditions and geographies, covering rare and complex real-world edge cases.

NVIDIA is also rolling out AlpaSim, an open‑source, end-to-end simulation framework for validating AV driving systems. AlpaSim, which is available on GitHub, provides realistic sensor modelling, configurable traffic dynamics, and scalable closed‑loop testing environments.

“In the next 10 years, I’m fairly certain a very, very large percentage of the world’s cars will be autonomous,” Huang said. “But this basic technique that I just described, using the synthetic data generation and simulation, applies to every form of robotic systems.”